UP Board Model Paper 2023-24: The UP Board has released model papers for 2023-24. Registered students can download them from the official website. The decision was taken by the Uttar Pradesh Madhyamik Shiksha Parishad (UPMSP). The papers are meant for students of classes 10 and 12 and have been released to help them prepare for the examination. They give students study material and a chance to practise questions and answers.

UP Board Model Paper 2023-24

- The UP Board has released model papers for 2023-24.

- Candidates registered for the examination can download them from the official website.

- The board has released model papers for classes 9, 10, 11 and 12.

- The initiative comes from the Uttar Pradesh Madhyamik Shiksha Parishad.

- Candidates can download the model papers by visiting the official website, upmsp.edu.in.

- This gives examinees a convenient new option.

- It will help students prepare for the examination.

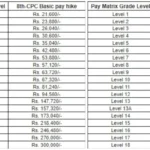

The UP Board class 12 examination will have two sections. The paper will carry a total of 100 marks, and candidates will get three hours and 15 minutes, with the first 15 minutes set aside for reading the question paper. The examination is important for candidates and calls for careful preparation, with specific preparation for each section. Staying organized is important for managing time well, and regular practice and self-monitoring are important for success.


Model Paper Released by the UP Board

- In the model paper released by the UP Board for class 10, Section 'A' consists of multiple-choice questions.

- It will contain 20 questions, and students will also be given an OMR sheet.

- Questions answered on the UP Board OMR sheet must be answered carefully.

- Each multiple-choice question will carry one mark.

- Section A will carry a total of 20 marks, while Section B will carry 50 marks.

- Students are advised to pay special attention to the questions answered on the OMR sheet.

- The UP Board has released these details to explain the paper pattern to class 10 students.
