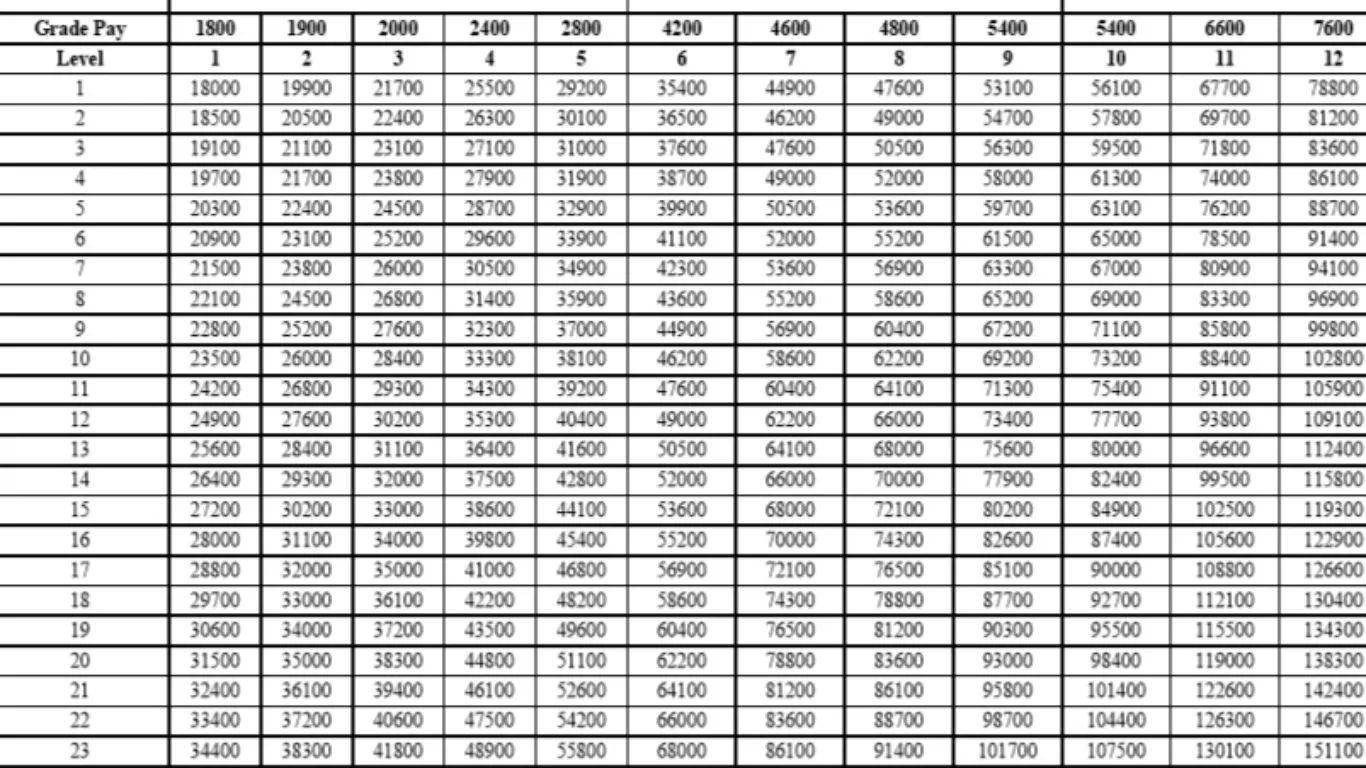

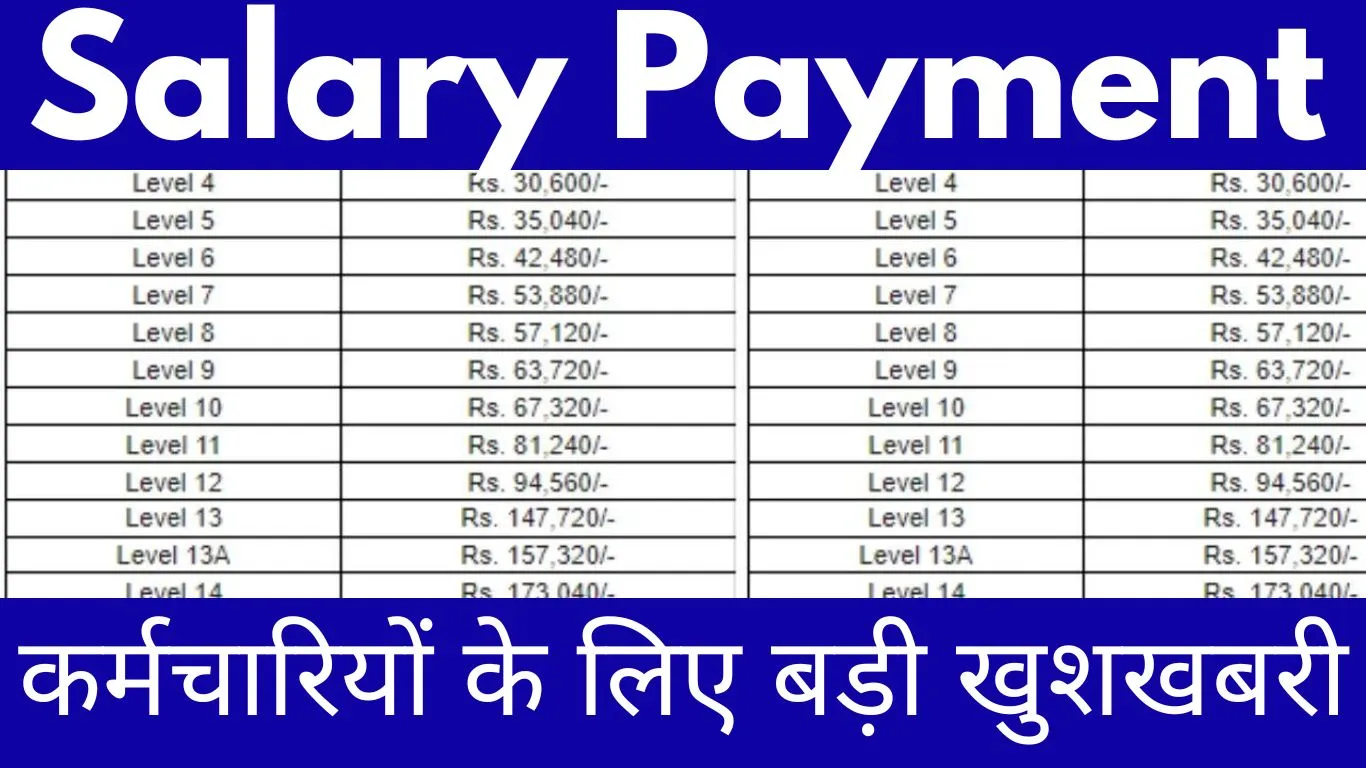

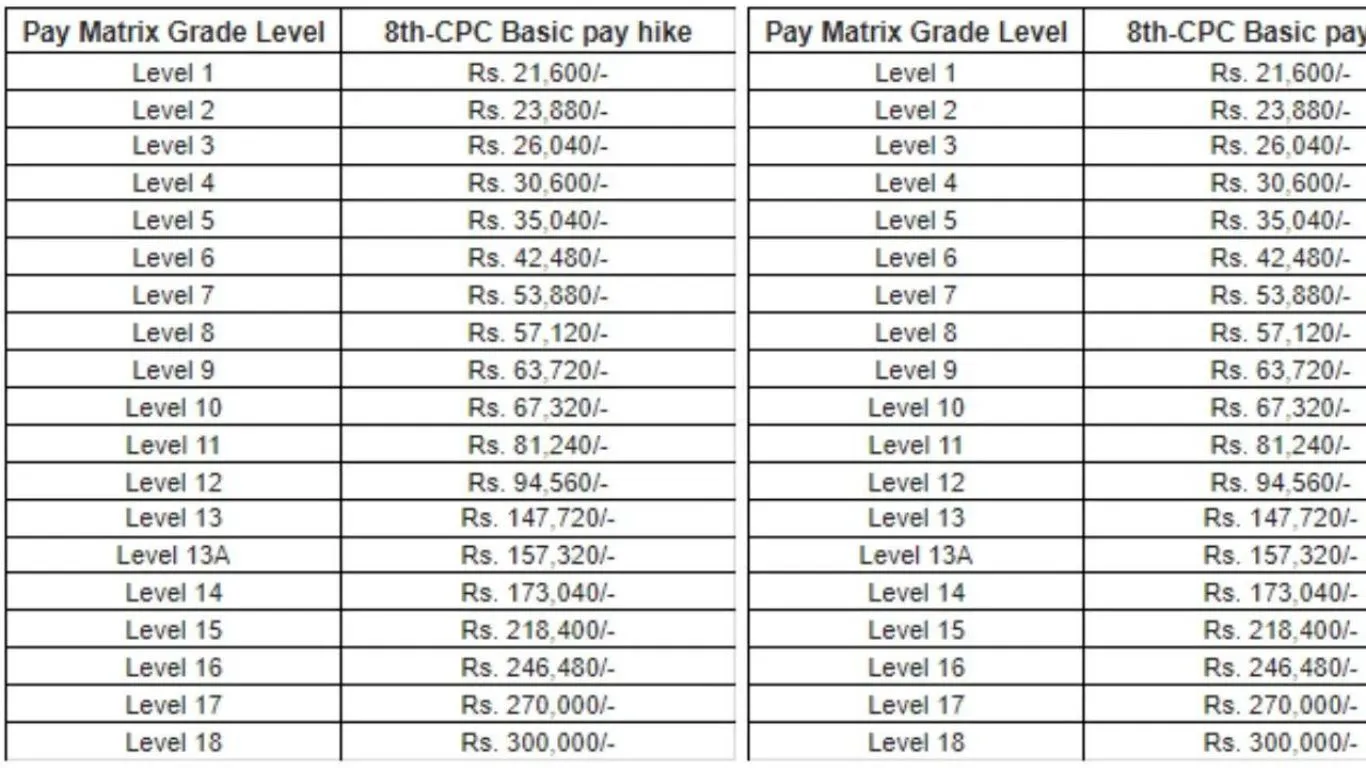

Salary Payment 2024: Government employees' salaries will continue to be paid in 2024. A possible pay increase is under study. The central government has fixed the pay levels, and a new pay scale is to be announced. Rules require that employees be paid on time.

Salary paid on the 1st of every month

The central government pays salaries on the first day of every month, so government employees receive their pay on a regular schedule.

- Salaries are paid through banks or by direct transfer.

- The aim is to ensure employees' financial security.

- It is a system of regular, on-time payment.

- It strengthens employees' financial position.

- It gives them a sense of confidence and security.

- In government service, this kind of pay arrangement acts as a form of social security.

- Timely pay encourages employees' enthusiasm and hard work.

- A system of on-time salary payment reflects responsiveness and transparency.

- It builds support among employees and strengthens the government organization.

- It helps ensure that government employees carry out their duties with dedication and care.

Order from the department

- Instructions have been issued to help all employees stay safe.

- Wearing a mask at the workplace is mandatory.

- Group gatherings have been discontinued.

- Employees are advised to wash their hands frequently.

- Premises must be cleaned at regular intervals.

- Departmental work will be carried out online.

- Any suspected infection must be reported immediately.

- Employees are advised to look after their health.

- Updates on COVID-19 will be shared regularly.

- All employees must comply with the department's safety policies.

- This order must be strictly followed.


Salary delays

Government employees' salaries have been delayed. The delay is attributed to a period of dispute, and it has intensified demands for payment. Employees are staging sit-ins and protests, and the government has so far failed to find a solution. Public transport is being disrupted, and employees have backed the agitation.

The salary delays are causing hardship in rural areas. The government should resolve the problem quickly, and the two sides should hold talks to reach a settlement. Services to citizens are being disrupted, and this must be addressed immediately.
